Faster pipelines with Knapsack Pro by parallelizing Cypress tests on GitLab CI

When our build pipeline had reached an average runtime of 20 minutes for each commit on each merge request, we knew we needed to speed things up. We wanted to optimize everything we could within the pipeline, and one way of doing this was to parallelize our Cypress integration tests with Knapsack Pro, which would save us a sizable amount of time.

What is our setup?

Our team works at Kiwi.com, a travel company selling tickets for flights, trains, buses and other kinds of transportation. More specifically, the team is responsible for the Help Center, where users can go to find articles and chat with support before, during and after their trips.

Our application is deployed in two distinct ways: as an npm package integrated as a sidebar into other modules within Kiwi.com (called sidebar), and as a standalone web application (called full-page), which is dockerized and deployed to GKE (Google Kubernetes Engine) for production and to Google Cloud Run for staging environments.

Most of the code is shared between these two units, but there are minor differences in design and major differences in flows and functionality, so they need to be tested separately.

We use GitLab for code reviews and version control and GitLab CI to run our build pipelines.

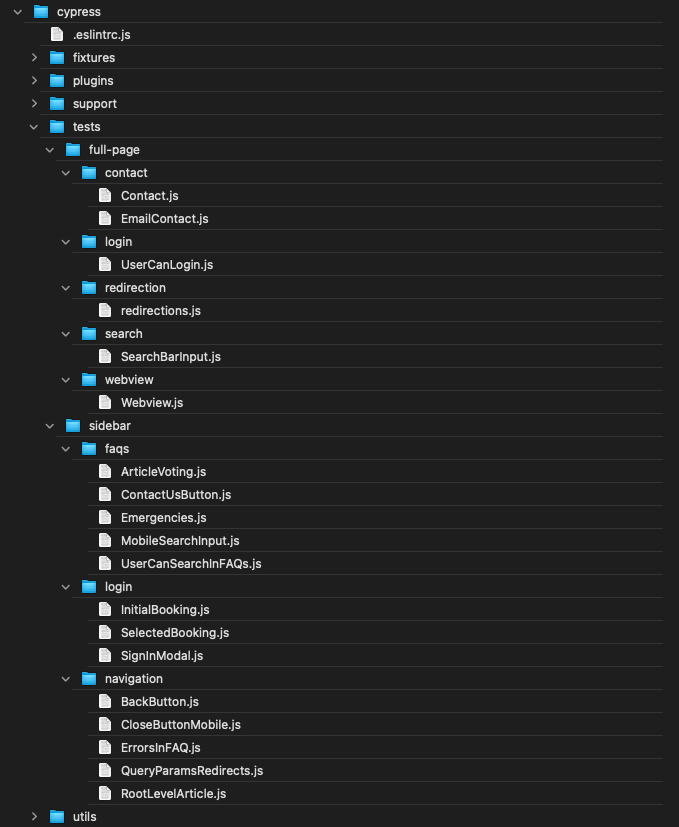

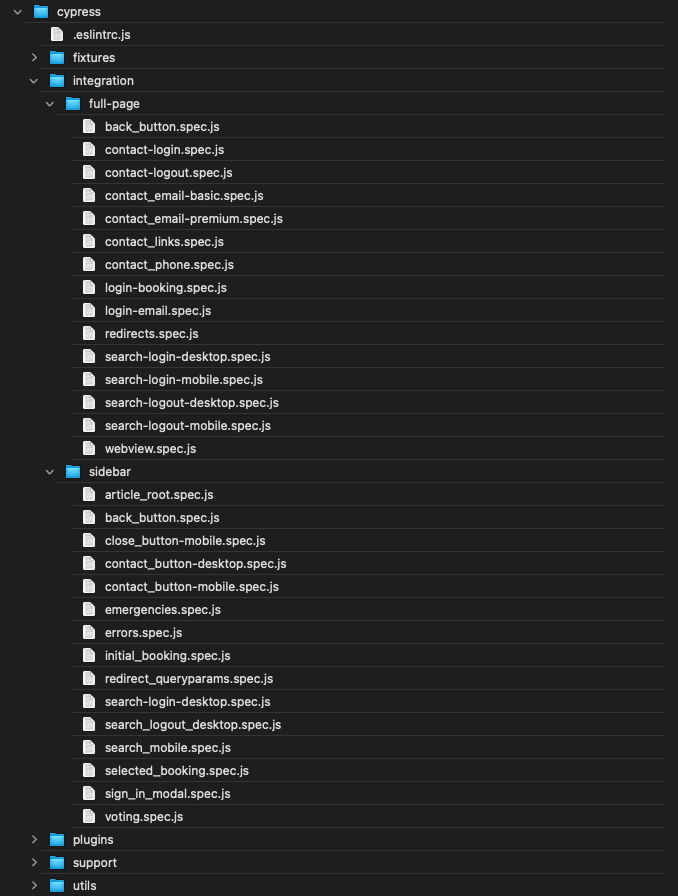

We use Cypress for end-to-end and integration testing, with separate tests for each type of deployment.

What problem are we trying to solve?

We want our pipelines and tests to run faster without spending too much time or money on it. Running each test on its own server is therefore probably not the best way to go, not to mention the overhead of starting up each runner and checking out the source code.

If our pipelines are slow, developers may forget that they have something running in the background and jump between tasks, or, worse, somebody else merges code into the main branch and the pipeline has to be run again.

If our pipelines are fast, we spend less time waiting for them: after applying changes suggested during code review, and when we need to get a hotfix out to production.

Manual splitting of Cypress tests

After seeing that running the tests in series took extremely long, we split some areas up and ran them on separate Cypress runners, which worked for a while. But as the test suites grew unevenly, we constantly had to shift around which suites should run together.

What is Knapsack Pro?

Knapsack Pro is a tool that splits test suites dynamically across parallel CI runners so that all of the runners finish their work at about the same time.

Its name comes from the “knapsack problem” in combinatorial optimization: given a set of items (test suites) with certain attributes (number of tests, average run time, etc.), determine how best to divide them to get the most value (every CI node runs tests from boot until shutdown, and all nodes finish at the same time).
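Knapsack Pro's own splitting algorithm isn't described in this article, so the following is only a minimal TypeScript sketch of the balancing idea, using the classic longest-processing-time-first greedy heuristic (strictly speaking, balancing runtimes across machines is the multiprocessor-scheduling relative of the knapsack problem). The file paths and durations are hypothetical, as are the `TestFile`, `CiNode` and `splitAcrossNodes` names.

```typescript
// Hypothetical input: a test file and its average duration in seconds.
interface TestFile {
  path: string;
  seconds: number;
}

// One parallel CI node and the work assigned to it.
interface CiNode {
  index: number;
  files: string[];
  totalSeconds: number;
}

// Longest-processing-time-first greedy: hand out the slowest files first,
// always to the node that currently has the least work.
function splitAcrossNodes(testFiles: TestFile[], nodeCount: number): CiNode[] {
  if (!Number.isInteger(nodeCount) || nodeCount < 1) {
    throw new Error("nodeCount must be a positive integer");
  }
  const nodes: CiNode[] = Array.from({ length: nodeCount }, (_, index) => ({
    index,
    files: [] as string[],
    totalSeconds: 0,
  }));
  const slowestFirst = [...testFiles].sort((a, b) => b.seconds - a.seconds);
  for (const file of slowestFirst) {
    // Find the least-loaded node; ties go to the lowest index.
    let target = nodes[0];
    for (const node of nodes) {
      if (node.totalSeconds < target.totalSeconds) {
        target = node;
      }
    }
    target.files.push(file.path);
    target.totalSeconds += file.seconds;
  }
  return nodes;
}

// Made-up suites and timings, for illustration only.
const files: TestFile[] = [
  { path: "full-page/search.js", seconds: 300 },
  { path: "full-page/login.js", seconds: 240 },
  { path: "full-page/contact.js", seconds: 180 },
  { path: "full-page/articles.js", seconds: 120 },
  { path: "full-page/chat.js", seconds: 90 },
  { path: "full-page/faq.js", seconds: 60 },
];

for (const node of splitAcrossNodes(files, 4)) {
  console.log(`node ${node.index}: ${node.totalSeconds}s`, node.files);
}
```

With the sample data, the four nodes end up with 300, 240, 240 and 210 seconds of work. A static split like this relies entirely on recorded averages; the dynamic splitting described above can also react when a test runs slower or faster than expected.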

It comes with a dashboard that shows how the test subsets were distributed in each parallel run, which is useful when splitting test suites or checking whether something is going wrong with the runs. The dashboard also suggests whether you’d benefit from fewer or more parallel runners.

The founder of Knapsack Pro, Artur Trzop, has been helping us proactively, sometimes noticing that something had gone wrong with our pipeline and notifying us even before we did. Whenever we had an issue, we could just write to him and we would figure out a solution together.

Plugging in Knapsack Pro

To try out Knapsack Pro, all we did was follow the docs, merge all the separate Cypress jobs into one, and set up some reporterOptions in Cypress so that the test results from all of the parallel runs are collected as JUnit reports.

Here’s a short example of our full-page test jobs before the conversion:

```yaml
.cypress_fp: &cypress_fp
  stage: cypress
  image: "cypress/browsers:node14.15.0-chrome86-ff82"
  retry: 2
  before_script:
    - yarn install --frozen-lockfile --production=false
  artifacts: # save screenshots as an artifact
    name: fp-screenshots
    expire_in: 3 days
    when: on_failure
    paths:
      - cypress/screenshots/

fp contact:
  <<: *cypress_fp
  script:
    - yarn cypress:run-ci -s "./cypress/tests/full-page/contact/*.js"

fp login:
  <<: *cypress_fp
  script:
    - yarn cypress:run-ci -s "./cypress/tests/full-page/login/*.js"

fp search:
  <<: *cypress_fp
  script:
    - yarn cypress:run-ci -s "./cypress/tests/full-page/search/*.js"

# etc ...
```

Here is the new full-page test job after the conversion:

.cypress_parallel_defaults: &cypress_parallel_defaults

full-page:

stage: cypress

image: "cypress/browsers:node14.15.0-chrome86-ff82"

retry: 0

parallel: 4

before_script:

- yarn install--frozen-lockfile --production=false

script:

- yarn @knapsack-pro/cypress --record false --reporter junit --reporter-options "mochaFile=cypress/results/junit-[hash].xml"

after_script: # collect all artifacts

- mkdir -p cypress/screenshots/full-page && cp -r cypress/screenshots/full-page $CI_PROJECT_DIR

- mkdir -p cypress/videos/full-page && cp -r cypress/videos/full-page $CI_PROJECT_DIR

- mkdir -p cypress/results && cp -r cypress/results $CI_PROJECT_DIR

# all the copying above is done to make it easy to browse the artifacts in the UI (single folder)

artifacts: # save videos and screenshots as artifacts

name: "$CI_JOB_NAME_$CI_NODE_INDEX-of-$CI_NODE_TOTAL"

when: on_failure

expire_in: 3 days

paths:

- full-page/

reports:

junit: results/junit-*.xml

expose_as: cypress_full-page

variables:

CYPRESS_BASE_URL: https://$CI_ENVIRONMENT_SLUG.$CLOUDRUN_CUSTOM_DOMAIN_SUFFIX/en/help

KNAPSACK_PRO_TEST_SUITE_TOKEN_CYPRESS: $KNAPSACK_PRO_TEST_SUITE_TOKEN_CYPRESS_FULL_PAGE

KNAPSACK_PRO_CI_NODE_BUILD_ID: full-page-$CI_COMMIT_REF_SLUG-$CI_PIPELINE_ID

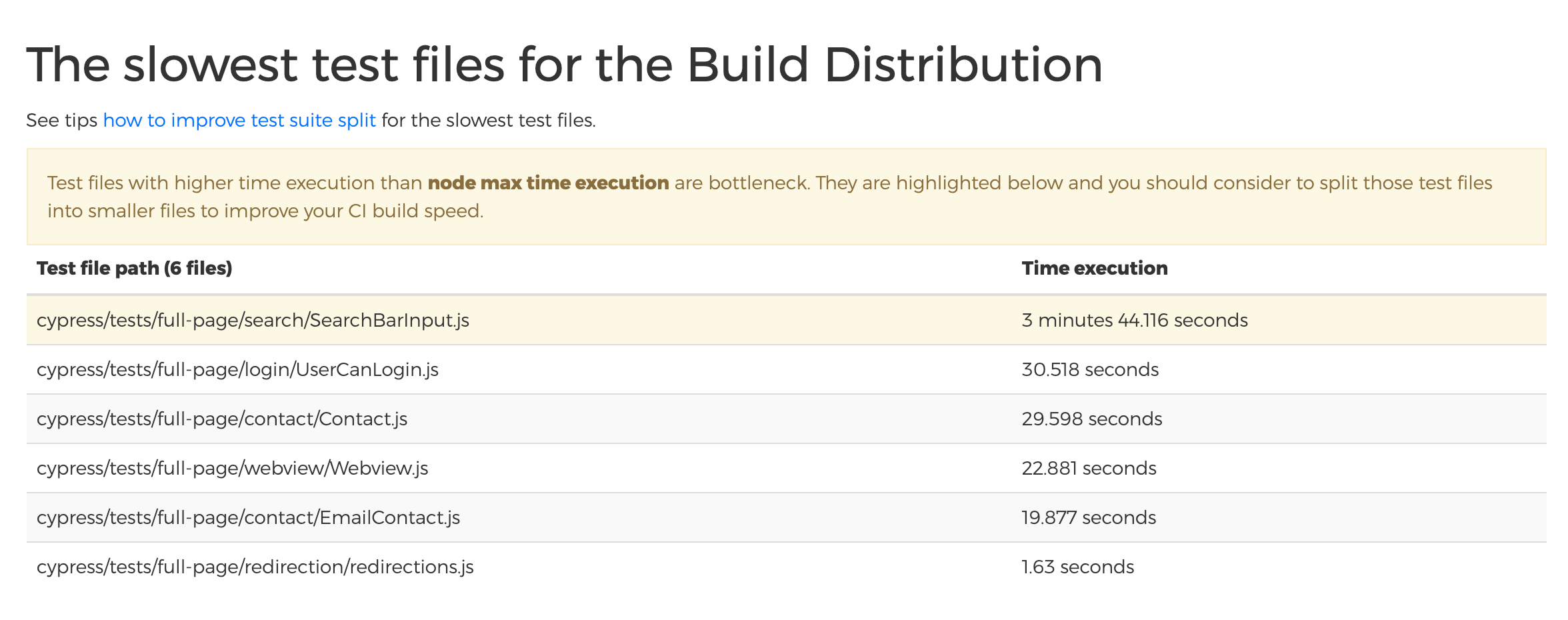

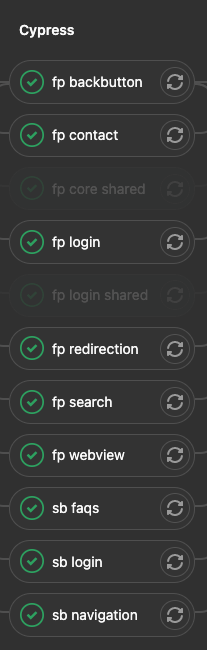

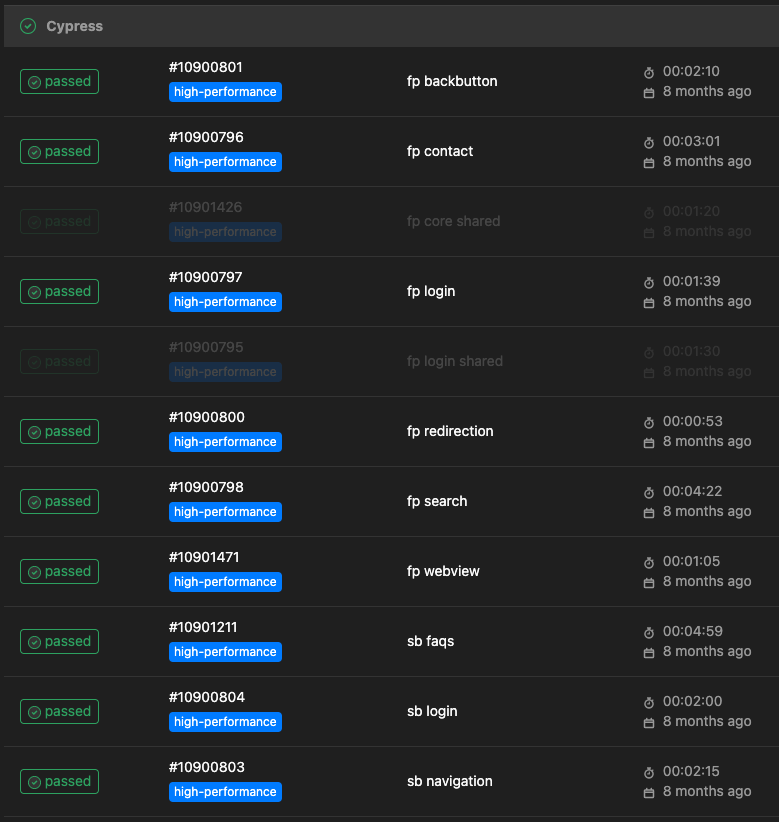

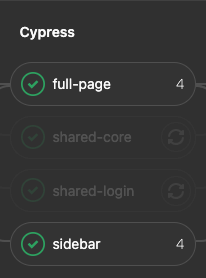

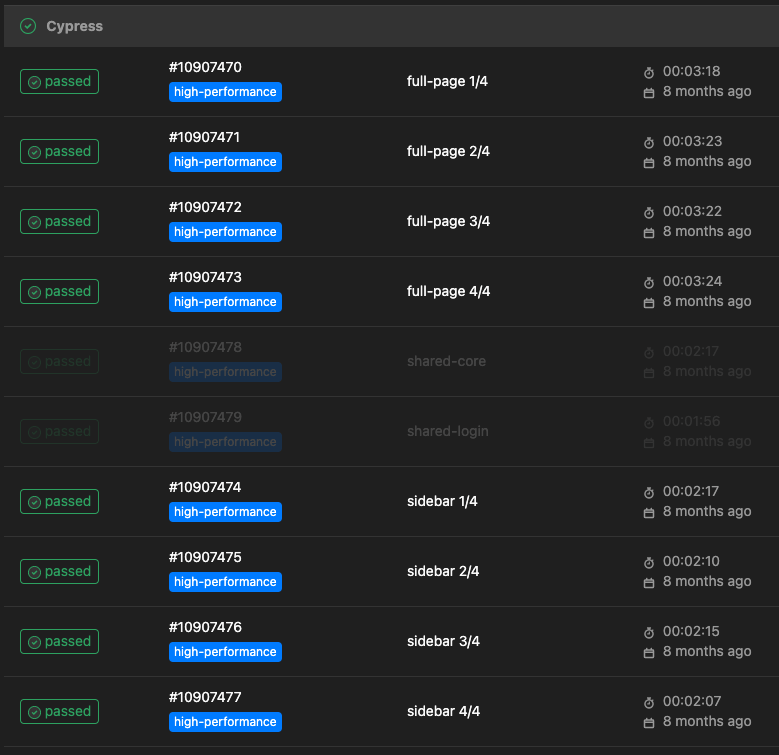

KNAPSACK_PRO_TEST_FILE_PATTERN: cypress/full-page/*.jsThis was the result we saw on the Knapsack Pro Dashboard, showing that our current separation of suites needs to be worked on to gain all the benefits of parallelization:

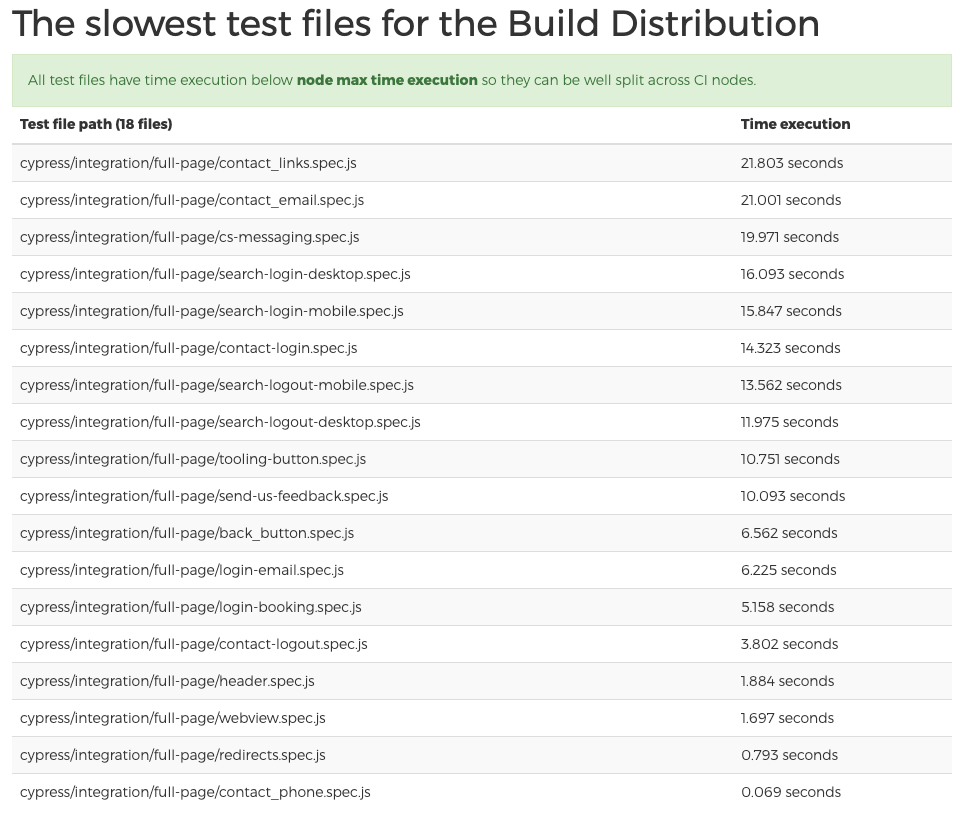

After some tweaking, splitting, and moving suites around, this is what we finally had:

It is still not perfectly balanced, but at this granularity we can use four runners at the same time without much idle time, so we’re not wasting CPU cycles.
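To put a number on “not much idle time”, a small sketch can compare each node’s runtime with the slowest one: the parallel job only completes when its slowest node does, so any time the other nodes finish early is capacity that doesn’t shorten the pipeline. The per-node minutes below are made up for illustration, not read from our dashboard, and `measureBalance` is a hypothetical helper.

```typescript
// Made-up wall-clock runtimes (minutes) of four parallel nodes in one run.
const nodeMinutes: number[] = [6.0, 5.5, 5.0, 4.5];

interface Balance {
  makespan: number; // the slowest node decides when the parallel job finishes
  idleMinutes: number; // total time the faster nodes spend waiting for the slowest one
  utilization: number; // busy time divided by (node count * makespan), between 0 and 1
}

function measureBalance(minutes: number[]): Balance {
  if (minutes.length === 0) {
    throw new Error("need at least one node");
  }
  const makespan = Math.max(...minutes);
  const busy = minutes.reduce((sum, m) => sum + m, 0);
  const capacity = makespan * minutes.length;
  return {
    makespan,
    idleMinutes: capacity - busy,
    // Guard against all-zero runtimes to avoid dividing by zero.
    utilization: capacity === 0 ? 1 : busy / capacity,
  };
}

const balance = measureBalance(nodeMinutes);
// prints "slowest node: 6 min, idle: 3 min, utilization: 87.5%"
console.log(
  `slowest node: ${balance.makespan} min, idle: ${balance.idleMinutes} min, ` +
    `utilization: ${(balance.utilization * 100).toFixed(1)}%`,
);
```

A perfectly even split would report zero idle minutes and 100% utilization; the closer a run gets to that, the less there is to gain from moving suites around.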

What else can we do?

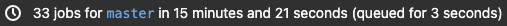

Fifteen minutes still feels long. A pipeline runtime of around 10 minutes is a good goal, but reaching it needs more optimization of our build times and Docker image build times, which are out of scope for this article. If you’re interested in how to do that, I recommend the article “Docker for JavaScript Devs: How to Containerize Node.js Apps Efficiently”.

Results

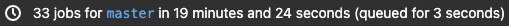

The switch to Knapsack Pro took less than a day. Most of the work afterward went into splitting up tasks and optimizing other parts of our GitLab CI setup. The whole process took a single developer two weeks.

By splitting the test suites into smaller, equally sized chunks, running the tests in parallel on four CI runners (for each of our target builds), and making some general pipeline fixes (better caching between jobs and steps, and running only the parts of the pipeline affected by a change), we’ve managed to cut our pipeline runtime from ~20 minutes to ~15 minutes.

When we add more test suites, Knapsack Pro will take care of distributing the tests across the CI runners and keeping them balanced, so pipeline runtimes don’t grow more than necessary.

We sped up our pipeline by 25%, or 5 minutes on average. Since each of our three developers starts about 6 to 10 pipelines a day (depending on the workload and type of work), we’ve essentially saved between 90 and 150 minutes of development time a day (5 minutes × 3 developers × 6 to 10 pipelines), and we’ve boosted morale, because nobody likes to wait.
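The savings estimate can be reproduced with a few lines of TypeScript. All inputs come from this article; like the article’s own estimate, the sketch assumes every pipeline saves the full 5 minutes and that all of that waiting time would otherwise be lost developer time. `speedupPercent` and `minutesSavedPerDay` are hypothetical helper names.

```typescript
// Relative speed-up of one pipeline run, in percent.
function speedupPercent(beforeMinutes: number, afterMinutes: number): number {
  return ((beforeMinutes - afterMinutes) / beforeMinutes) * 100;
}

// Assumes every pipeline saves the full difference and that all of that
// waiting time would otherwise be lost developer time.
function minutesSavedPerDay(
  beforeMinutes: number,
  afterMinutes: number,
  developers: number,
  pipelinesPerDeveloper: number,
): number {
  return (beforeMinutes - afterMinutes) * developers * pipelinesPerDeveloper;
}

// Article figures: ~20 min before, ~15 min after, 3 developers, 6 to 10 pipelines each per day.
const low = minutesSavedPerDay(20, 15, 3, 6);
const high = minutesSavedPerDay(20, 15, 3, 10);
// prints "25% faster, 90-150 minutes saved per day"
console.log(`${speedupPercent(20, 15)}% faster, ${low}-${high} minutes saved per day`);
```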